Note: This article is Part 2 in a series about using Google Optimize to run a basic A/B test to help inform decisions on your website. Check out how to use your goals to set up the experiment in Part 1.

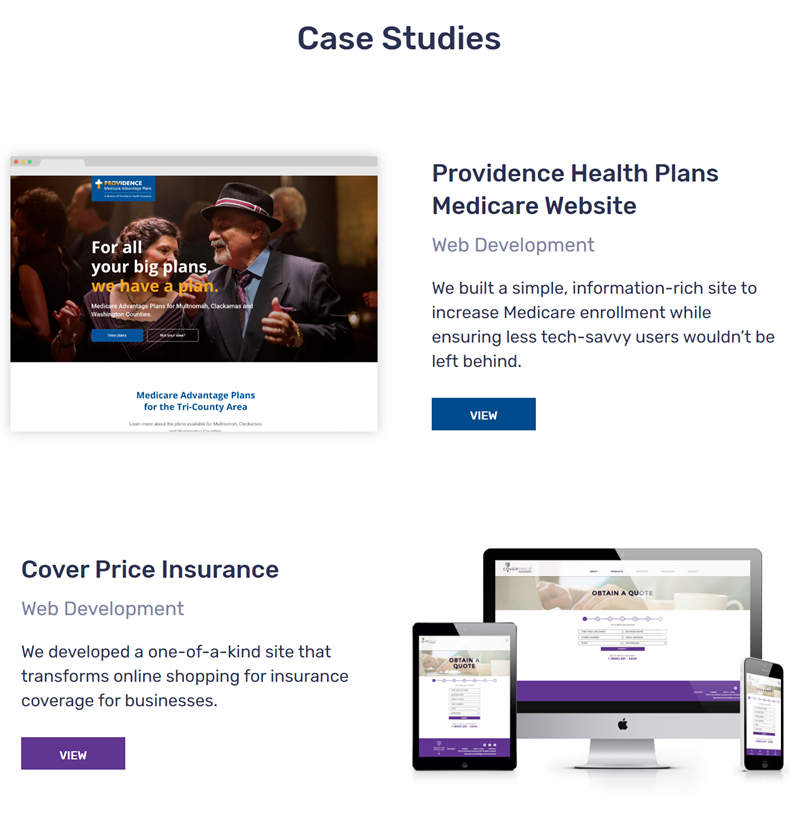

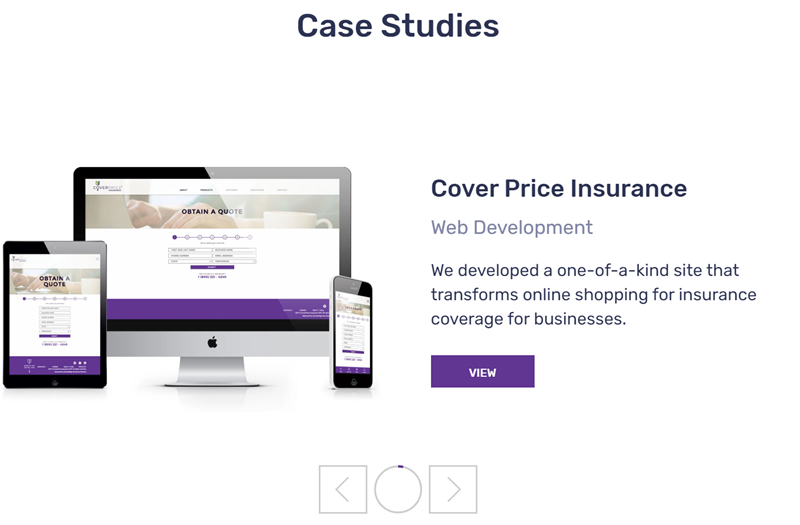

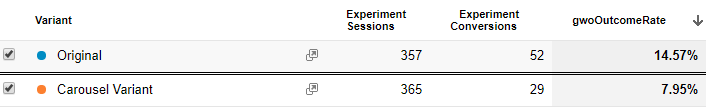

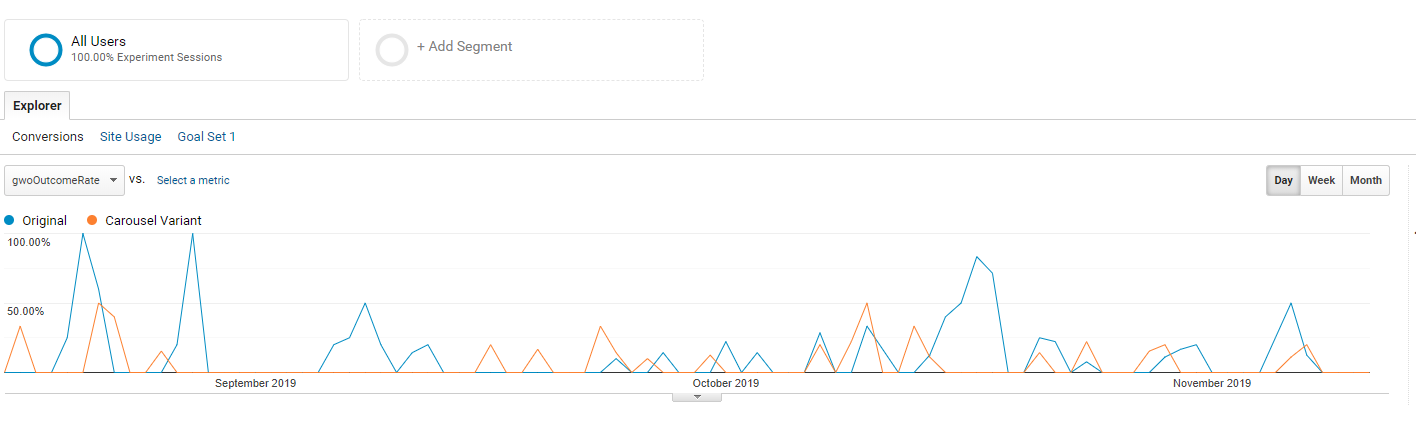

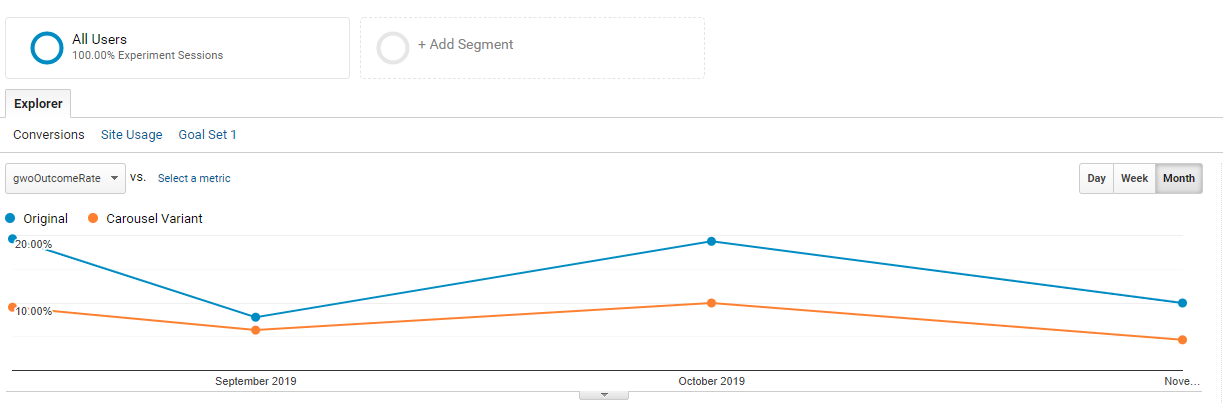

It's been a while since we set up our experiment to test which version of Data Driven Design's home page sent a greater percentage of users to our work section to check out our prior projects. Since then, we've waited for the data to come in, reviewed it, and chosen which version to use moving forward. Let's go over how that played out so you can see how to use real data to make decisions about your own website.